SAIL (formerly VAS) Group has a paper on robust explanations accepted at AAMAS23

Francesco Leofante, a Research Fellow in the Department of Computing and a former Research Associate in the SAIL (formerly VAS) group, has had a paper accepted at the 22nd International Conference on Autonomous Agents and Multiagent Systems (AAMAS23):

F. Leofante, A. Lomuscio. Towards Robust Contrastive Explanations for Human-Neural Multi-Agent Systems (extended abstract). Proceedings of the 22nd International Conference on Autonomous Agents and Multiagent Systems (AAMAS23). London, UK. IFAAMAS Press.

We caught up with Francesco to get an idea about his work and how it contributes to research in the area of Robust Contrastive Explanations.

Could you quickly summarise what the paper is about?

Our paper deals with robustness issues of counterfactual explanations in neural agents. Counterfactuals are typically used to offer recourse recommendations. Imagine, for example, a system which decides whether an application for a loan should be accepted or rejected. If it is rejected, the person who applied for that loan might ask themselves what they can do to have their application accepted.

Counterfactual explanations can be useful to provide more insights into the decision making of such a system. They are computed as perturbations to the input such that the classification flips - in our example, what would have to change about the loan application for it to be accepted instead of rejected. This perturbation is used to generate recourse recommendations (e.g. increase salary by 10000 and the loan application will be accepted).

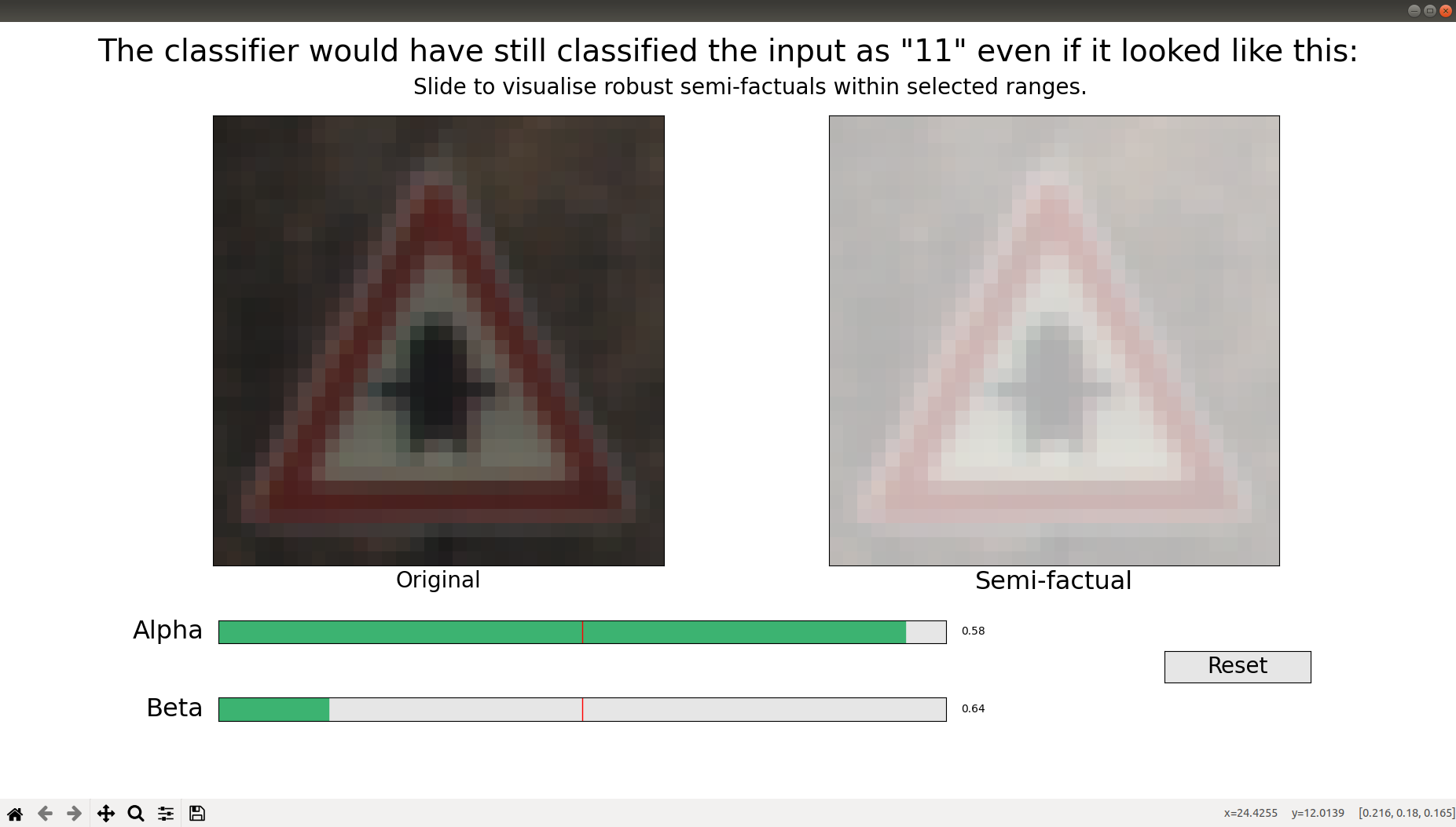

When implementing the recommendation, the user might not follow it exactly. Imagine they increase their salary by 12000 instead of 10000 (because they assume that the higher the salary the better). This deviation from the original recommendation invalidates the counterfactual explanation because of the structure of the decision boundaries in the neural agent and the loan might be rejected again. Robust counterfactual explanations can help in such a situation - a robust explanation would tolerate deviations from the recommendation to a certain degree. Besides this, we also deal with semifactual explanations in the paper.

How do you advance the state of the art in the area and what are your contributions?

We are the first to propose an appraoch that comes with exhaustive (formal) guarantees on the robustness.

Judging by the example you gave this seems to not only be a theoretical method. Are there any practical applications where this is relevant or that are unlocked by your progress?

In our work we test the developed method on loan application datasets from the FICO competition. This is real data and allows us to evaluate the algorithm focused on counterfactuals. Another benchmark we use are traffic sign recognition tasks. Here, we make sure that an image classifier network correctly classifies a traffic sign and we can provide guarantees that it will continue to do so even when photometric perturbations are applied. If classification fails, we generate an explanation detailing what caused that failure.

That sounds very relevant indeed! So what are future directions of research that you’d like to pursue in that area?

We plan to investigate whether similar techinques based on verification can be extended to account for other notions of robustness within Explainable AI.