SAIL (formerly VAS) Group has a paper on verification-friendly networks accepted at IJCNN23

Patrick Henriksen, a PhD student in the SAIL (formerly VAS) Group, and Francesco Leofante, a Research Fellow in the Department of Computing and a former Research Associate in the SAIL (formerly VAS) Group, have had a paper accepted at the 36th International Joint Conference on Neural Networks (IJCNN23):

P. Henriksen, F. Leofante, A. Lomuscio. Verification-friendly Networks: the Case for Parametric ReLUs. Proceedings of the 36th International Joint Conference on Neural Networks (IJCNN23). Gold Coast, Australia. IEEE Press.

We caught up with them to get an idea about their work and how it contributes to research in the area of safe neural networks.

Could you quickly summarise what the paper is about?

Neural networks are often brittle: a small change in their inputs can lead to a large change in their outputs. This can cause unexpected behaviour, which is problematic when such networks are deployed in safety-critical domains. There is therefore a large body of work on algorithms that formally verify the robustness of networks to input perturbations. However, current approaches don't always scale to state-of-the-art networks, which can be very large.

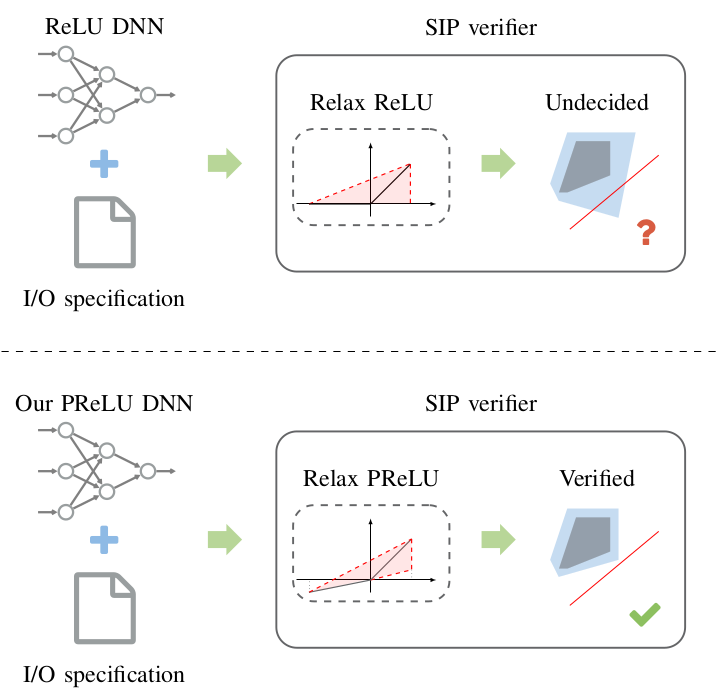

We therefore investigate how to train neural networks that are easier to verify with Symbolic Interval Propagation (SIP), a commonly used verification technique. The challenge is to maintain the network's accuracy on its task while also increasing its robustness through our training methods.
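To make the idea behind SIP more concrete, here is a minimal Python sketch of one simple variant of symbolic interval propagation. It is our own illustration, not the authors' implementation or the exact algorithm of any particular verifier. Each neuron carries a lower and an upper linear expression over the network inputs; affine layers are propagated exactly, and a ReLU whose sign cannot be decided over the input box is relaxed with a lower bound of 0 and an upper bound given by the chord through (l, 0) and (u, u). The function names (`propagate`, `affine`, `relu`, `concretize_lower`, `concretize_upper`) and the example weights are hypothetical.

```python
# Sketch of symbolic interval propagation for a small fully connected ReLU
# network. A linear expression over the inputs is stored as (coeffs, const),
# meaning sum(c_i * x_i) + const. All names here are illustrative.

def concretize_lower(expr, box):
    """Smallest value the linear expression takes over the input box."""
    coeffs, const = expr
    return const + sum(c * (lo if c >= 0 else hi) for c, (lo, hi) in zip(coeffs, box))


def concretize_upper(expr, box):
    """Largest value the linear expression takes over the input box."""
    coeffs, const = expr
    return const + sum(c * (hi if c >= 0 else lo) for c, (lo, hi) in zip(coeffs, box))


def affine(bounds, weights, biases):
    """Propagate (lower, upper) expressions through W x + b."""
    n_in = len(bounds[0][0][0])
    out = []
    for row, b in zip(weights, biases):
        low_c, up_c = [0.0] * n_in, [0.0] * n_in
        low_k, up_k = b, b
        for w, (low, up) in zip(row, bounds):
            # A positive weight keeps the bound direction; a negative one swaps it.
            src_low, src_up = (low, up) if w >= 0 else (up, low)
            for i in range(n_in):
                low_c[i] += w * src_low[0][i]
                up_c[i] += w * src_up[0][i]
            low_k += w * src_low[1]
            up_k += w * src_up[1]
        out.append(((low_c, low_k), (up_c, up_k)))
    return out


def relu(bounds, box):
    """Apply ReLU to symbolic bounds, relaxing neurons whose sign is unknown."""
    out = []
    for low, up in bounds:
        l = concretize_lower(low, box)
        u = concretize_upper(up, box)
        zero = ([0.0] * len(low[0]), 0.0)
        if u <= 0:  # always inactive: output is exactly 0
            out.append((zero, zero))
        elif l >= 0:  # always active: ReLU is the identity
            out.append((low, up))
        else:  # unstable: lower bound 0, upper bound is the chord (l, 0)-(u, u)
            lam = u / (u - l)
            out.append((zero, ([lam * c for c in up[0]], lam * (up[1] - l))))
    return out


def propagate(box, layers):
    """Bound each output over the input box; ReLU follows every layer but the last."""
    n = len(box)
    bounds = []
    for i in range(n):
        # Each input starts with identical lower and upper expressions: x_i itself.
        unit = ([1.0 if j == i else 0.0 for j in range(n)], 0.0)
        bounds.append((unit, unit))
    for k, (weights, biases) in enumerate(layers):
        bounds = affine(bounds, weights, biases)
        if k < len(layers) - 1:
            bounds = relu(bounds, box)
    return [(concretize_lower(low, box), concretize_upper(up, box)) for low, up in bounds]


# Hypothetical 2-2-1 network: y = relu(x1 + x2) + relu(x1 - x2).
box = [(-1.0, 1.0), (-1.0, 1.0)]
layers = [([[1.0, 1.0], [1.0, -1.0]], [0.0, 0.0]), ([[1.0, 1.0]], [0.0])]
print(propagate(box, layers))  # [(0.0, 3.0)]
```

With both inputs in [-1, 1], plain interval arithmetic, which forgets that both hidden neurons depend on the same inputs, would bound the output by [0, 4]. The symbolic bounds give [0, 3], while the true range is [0, 2]. The remaining gap comes from relaxing the two unstable ReLUs, which is the kind of over-approximation error that verification-friendly training tries to reduce.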

How do you advance the state of the art in the area and what are your contributions?

We show that Parametric Rectified Linear Units (Parametric ReLUs) can be used instead of standard ReLU activation functions to train networks that are accurate, robust and easier to verify. Our approach is based on a simple regulariser that pushes the Parametric ReLUs towards minimising the over-approximation error induced by the linear relaxations commonly used in state-of-the-art verifiers. We also show that this approach can be integrated with adversarial training to boost robustness while maintaining accuracy and verifiability.
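Why a Parametric ReLU can be friendlier to linear relaxations shows up in a short calculation. The sketch below assumes the standard triangle relaxation: for a PReLU with negative-side slope a and an unstable pre-activation range [l, u] with l < 0 < u, the region between the activation and the chord joining (l, a*l) to (u, u) has area |1 - a| * (-l) * u / 2. With a = 0 (a standard ReLU) this is the familiar -l*u/2, and it shrinks to zero as a approaches 1, where the activation becomes linear. The function `gap_regulariser` is a hypothetical stand-in that sums this area over a layer. It only illustrates the kind of quantity such a regulariser could target and is not the regulariser defined in the paper. A real training loop would compute it in an autodiff framework so that gradients reach the slopes.

```python
# Illustrative arithmetic for the PReLU relaxation gap (not the paper's code).

def prelu(x, a):
    """Parametric ReLU: identity for x >= 0, slope `a` for x < 0."""
    return x if x >= 0 else a * x


def relaxation_gap(l, u, a):
    """Area between a PReLU on [l, u] and the chord joining its endpoints.

    Stable neurons (l >= 0 or u <= 0) are linear on [l, u], so the
    relaxation is exact. For unstable ones the area is |1 - a| * (-l) * u / 2.
    """
    if l >= 0 or u <= 0:
        return 0.0
    return abs(1.0 - a) * (-l) * u / 2.0


def gap_regulariser(pre_activation_bounds, slopes):
    """Hypothetical penalty: total relaxation gap over one layer of PReLUs."""
    return sum(relaxation_gap(l, u, a) for (l, u), a in zip(pre_activation_bounds, slopes))


def gap_by_integration(l, u, a, n=10_000):
    """Midpoint-rule check of relaxation_gap: integrate |chord - PReLU|."""
    h = (u - l) / n
    total = 0.0
    for i in range(n):
        x = l + (i + 0.5) * h
        chord = a * l + (u - a * l) * (x - l) / (u - l)
        total += abs(chord - prelu(x, a)) * h
    return total


print(relaxation_gap(-1.0, 1.0, 0.0))   # 0.5 (standard ReLU)
print(relaxation_gap(-1.0, 1.0, 1.0))   # 0.0 (linear activation)
print(relaxation_gap(-2.0, 3.0, 0.25))  # 2.25
```

Pushing every slope to 1 would remove the gap entirely, but it would also make each activation the identity and the whole network linear. A penalty of this kind therefore has to be weighed against task accuracy.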

Why is this an important advancement? Are there any practical applications where it is relevant?

As outlined above, verification is extremely important when AI is deployed in real-life applications, especially safety-critical ones. Our approach not only makes it easier to certify that a neural network used in such a scenario is safe, but also improves the network's robustness. This is highly relevant in areas such as autonomous driving and aviation.

Interesting. So what's next for you, and what future projects would you like to explore?

Our next aim is to examine the relationship between our work and the certified and robust training approaches presented in the literature.