SAIL (formerly VAS) Group has a paper on verification against LVM-based specifications accepted at CVPR23

Harleen Hanspal, a PhD Student in the SAIL (formerly VAS) Group, has had a paper accepted at the 36th IEEE Conference on Computer Vision and Pattern Recognition (CVPR23):

H. Hanspal, A. Lomuscio. Efficient Verification of Neural Networks against LVM-based Specifications. Proceedings of the 36th IEEE Conference on Computer Vision and Pattern Recognition (CVPR23). Montreal, Canada. Computer Vision Foundation/IEEE.

We caught up with her to learn about the method presented in the paper and the contribution it makes in the area of neural network safety.

Could you sum up the main ideas of the paper?

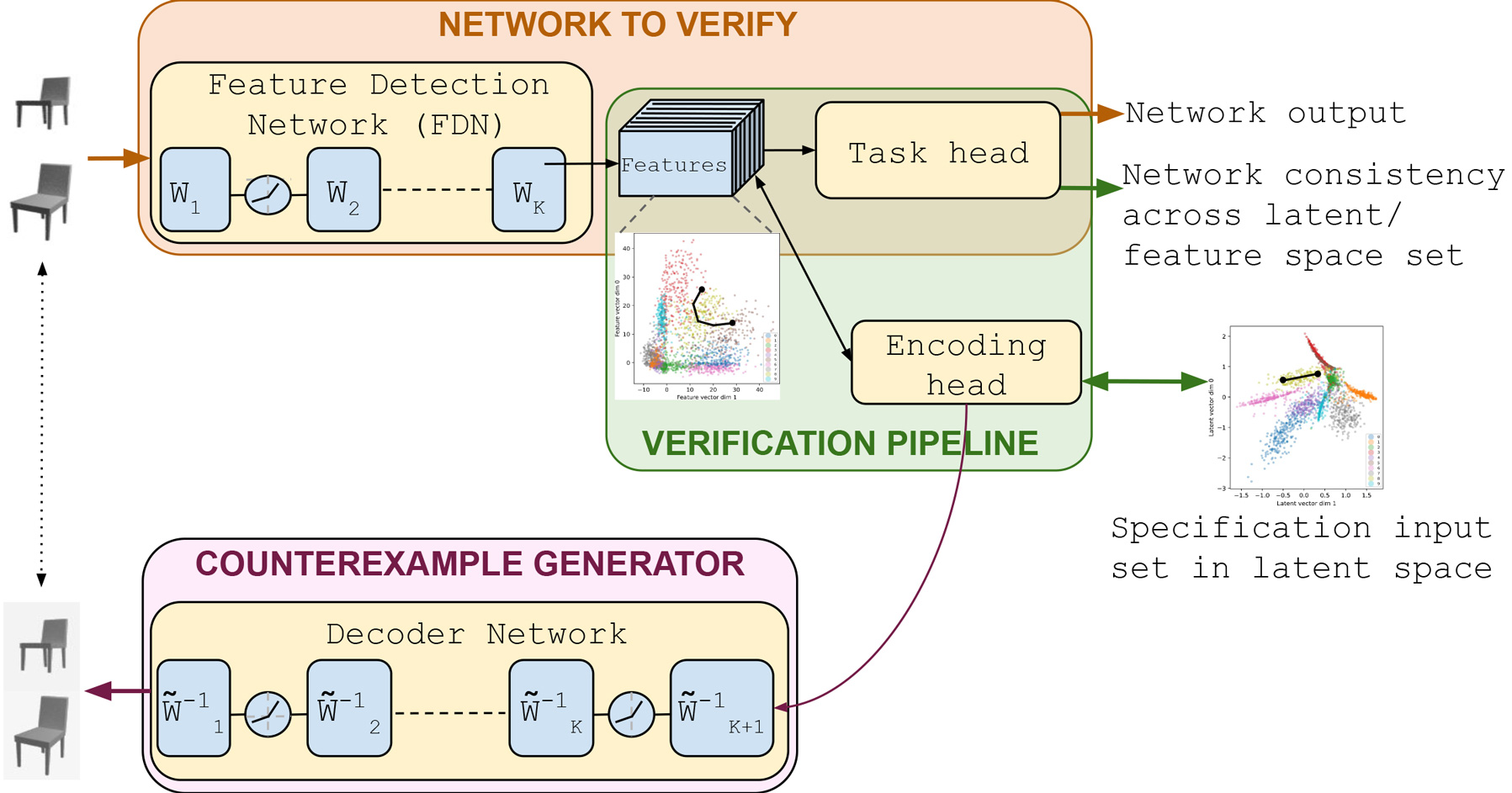

The deployment of perception systems based on neural networks (NNs) in safety critical applications requires an assurance on their robustness, and deterministic guarantees on network robustness require formal verification. However, standard approaches for verifying robustness analyse invariance to analytically defined transformations, but not diverse and ubiquitous changes which are more natural such as changes to object pose, scene, viewpoint or the occurrence of occlusions. To this end, this paper presents an efficient approach for verifying specifications definable using Latent Variable Models that capture such diverse changes. The approach involves adding an invertible encoding head to the network to be verified, enabling the verification of latent space sets with minimal reconstruction overhead.

How does your work advance the state of the art and what are your contributions?

Differently from previous work in this area, our approach is relatively independent of input dimensionality and scales to a broad class of deep network architectures and real-world datasets by mitigating the inefficiency and decoder expressivity dependence in the present state-of-the-art. We demonstrate this through experiments for three classes of proposed latent space specifications, each capturing different types of realistic input variations.

So why is this progress important - are there any practical applications where this is relevant?

The contribution of this work is mainly in the efficient incorporation of more expressive perturbations in the verification framework. Given the diverse nature of perturbations any deployed system encounters in the real world, I would argue that this work is important for verification to be more broadly useful and applicable in the industry. This especially concerns areas where safety or certification is critical such as, autonomous driving or flying.